To interpret and understand uploaded build plans, TakeoffQS uses a combination of traditional computer vision techniques, as well as state-of-the-art deep learning models.

These models are designed to handle the large amounts of variability in real-world plans, where symbols, annotations, and layouts differ significantly between projects. Rather than relying on an algorithmic, fixed rule set, our system learns patterns directly from training data and applies multiple validation steps before producing outputs.

System Overview

At a high level, TakeoffQS processes construction drawings using a multi-stage computer vision pipeline:

Each stage in the pipeline addresses a specific category of problems. Deep learning models are used where visual interpretation and ambiguity are dominant, such as recognizing symbols and structural elements. Classical computer vision and rule-based methods are then applied to enforce geometric structure, consistency, and domain constraints. This combination allows the system to remain robust across varying drawing styles while maintaining reliable and interpretable outputs.

Page Classification

One of the earliest challenges in processing construction plan sets is determining what each page actually represents. A typical set includes a mixture of architectural, structural, mechanical, and reference drawings, each with different visual elements and processing requirements.

To address this, TakeoffQS performs visual classification on each page of the build plan, using a DINOv3-based model. The model operates directly on rendered plan pages and learns high-level visual and structural representations, rather than relying on file metadata, naming conventions, or heuristic rules. This allows the system to distinguish page types based purely on visual content.

Page classification is applied early in the pipeline and is used to route pages to the appropriate downstream processing stages. This reduces unnecessary computation, limits downstream failure, and lowers the amount of manual verification required, resulting in improved overall accuracy.

Segmentation In TakeoffQS

The primary segmentation and detection layer in TakeoffQS is based on a YOLOv11 architecture. This model identifies relevant visual elements within construction drawings, including symbols and other domain-specific components, on pages that have been previously selected for processing.

The model operates on high-resolution plans and is designed to handle dense visual scenes where many elements may appear in close proximity. Its outputs serve as an intermediate representation that is refined by subsequent stages in the pipeline, rather than being treated as final results.

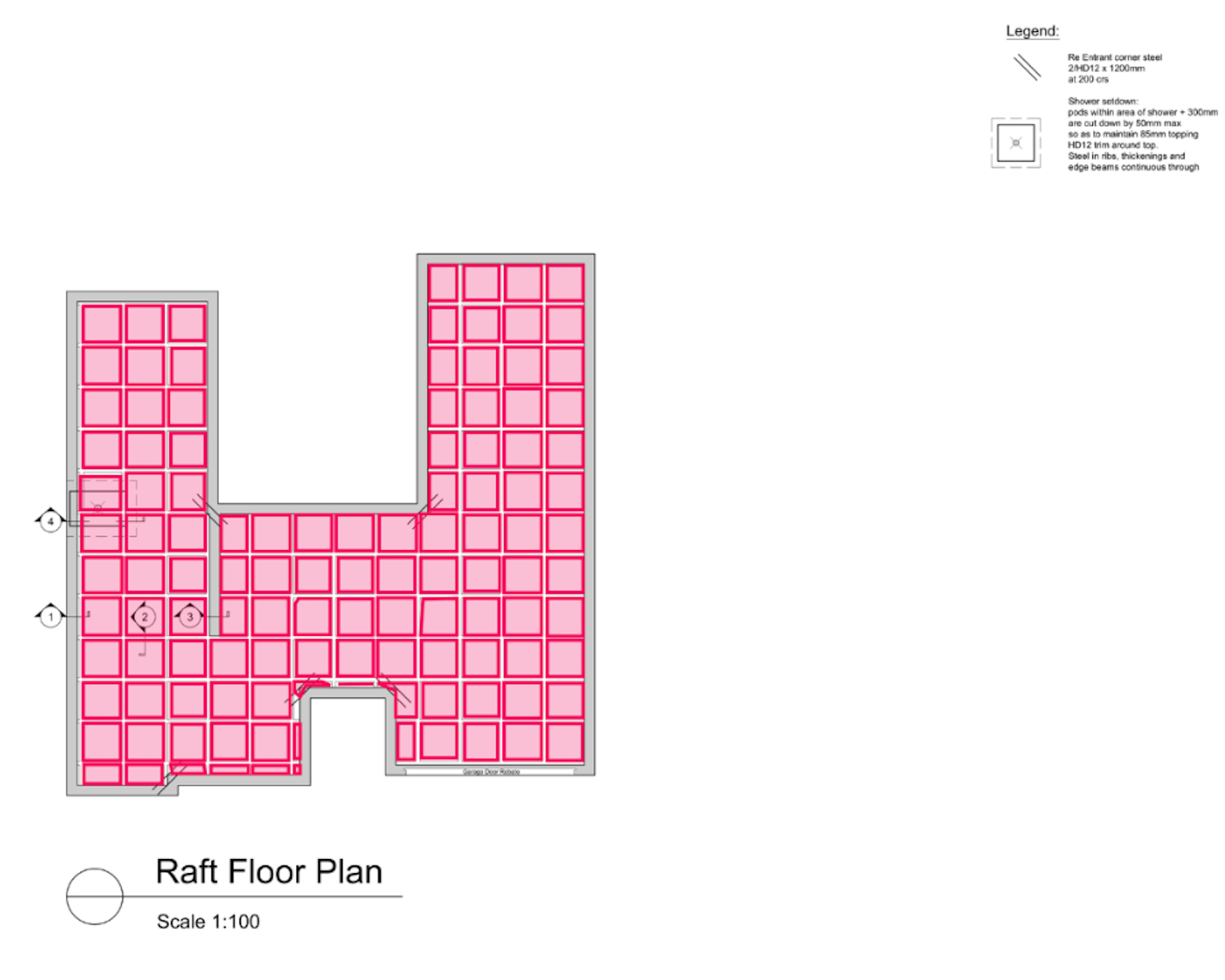

For example, on a foundation plan, the segmentation layer detects key structural elements such as pods, ribs, and beams. These elements are often tightly packed and visually similar, with differences in line weight, scale, and annotation depending on drafting standards. The model segments individual pods as repeated structural units and identifies beams as elongated connecting elements. Additional geometric algorithms and machine learning models are then applied to identify ribs and to further classify each beam type within the foundation.